This is a logic puzzle that combines Masyu with two games played on the board shown in the puzzle: Go and Gomoku. Your objective is to fill out the board according to Masyu rules, plus additional rules that are thematic to those two games. The extra stones given to you suggest that, while filling out the Masyu, you need to place them on the board: you must place stones along the path so that every path cell that could contain a black or white circle/stone according to Masyu rules actually does contain one. The additional rules you must adhere to are:

- You cannot place stones such that some stones would be captured and removed from the board as per Go rules.

- You cannot place stones such that 5 or more stones of the same color would form a straight line, the game-ending condition of Gomoku.
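The requirement that every path cell that could hold a circle must hold a stone can be made concrete with the standard Masyu rules. A black circle is one where the path turns and then goes straight through the next cell in both directions. A white circle is one where the path goes straight through and turns in at least one of the two adjacent path cells. The sketch below assumes the path is a closed loop given as an ordered list of cells. The names `impliedStones` and `isStraight` are hypothetical and are not taken from the puzzle's actual code.

```typescript
// A grid cell as [row, col].
type Cell = [number, number];
type Stone = "black" | "white";

// True if the loop goes straight through loop[i]: the step into the cell
// has the same direction as the step out of it.
function isStraight(loop: Cell[], i: number): boolean {
  const n = loop.length;
  const [pr, pc] = loop[(i - 1 + n) % n];
  const [r, c] = loop[i];
  const [nr, nc] = loop[(i + 1) % n];
  return r - pr === nr - r && c - pc === nc - c;
}

// Hypothetical helper: for a closed loop (cells in order, last adjacent to
// first), return every cell that could hold a stone, keyed by "row,col".
function impliedStones(loop: Cell[]): Map<string, Stone> {
  const n = loop.length;
  const result = new Map<string, Stone>();
  for (let i = 0; i < n; i++) {
    const here = isStraight(loop, i);
    const prev = isStraight(loop, (i - 1 + n) % n);
    const next = isStraight(loop, (i + 1) % n);
    const key = loop[i].join(",");
    if (!here && prev && next) {
      // Black: the path turns here and runs straight through both neighbors.
      result.set(key, "black");
    } else if (here && (!prev || !next)) {
      // White: the path is straight here and turns on at least one side.
      result.set(key, "white");
    }
  }
  return result;
}
```

For example, on a 3×3 loop around the border, the four corners come out as black and the four edge midpoints as white.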

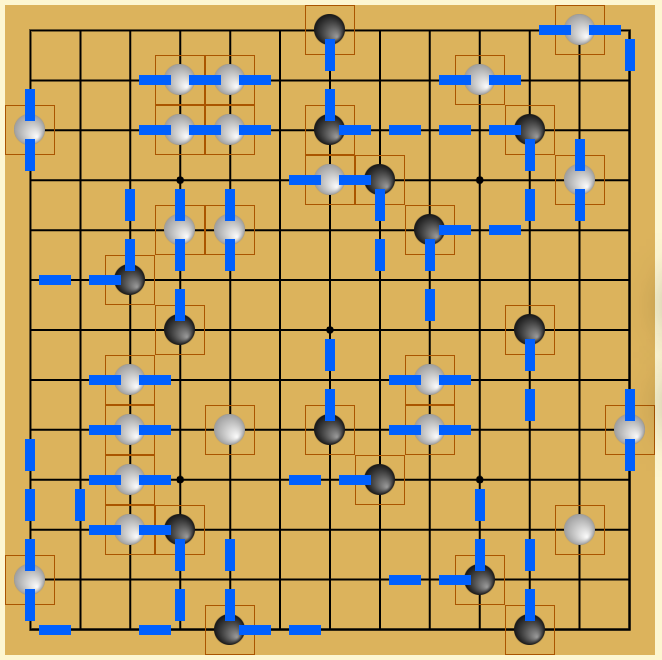

Step 1: Do everything you can using normal Masyu rules. (You might be able to get further than this, but this is sufficient to demonstrate how you might come across the other rules.)

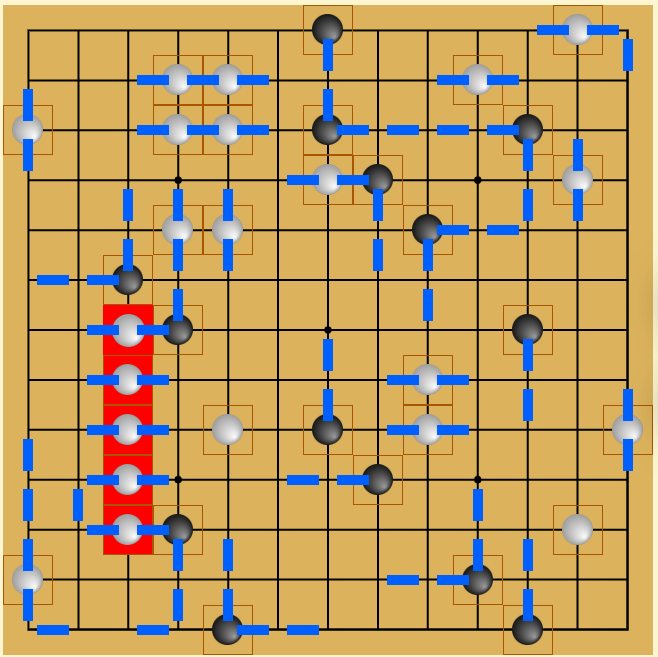

You might notice that the bottom left corner is ambiguous, because it can be completed in either of two ways:

Option 1:

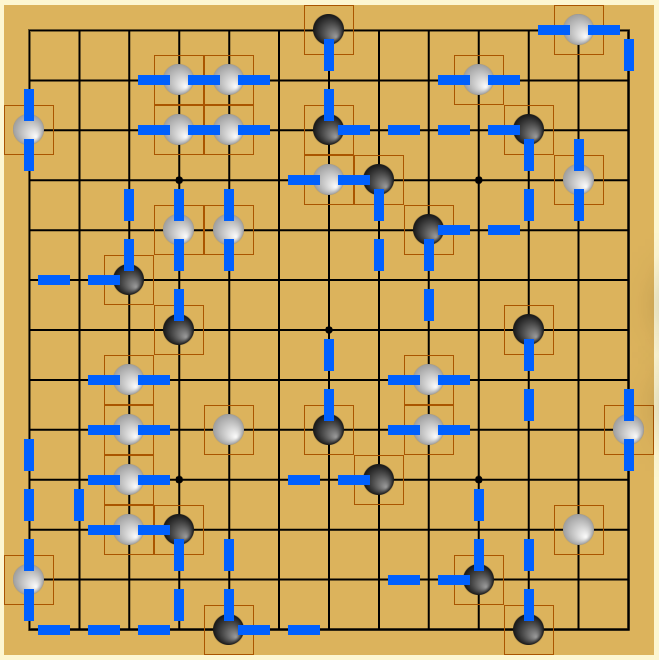

or

Option 2:

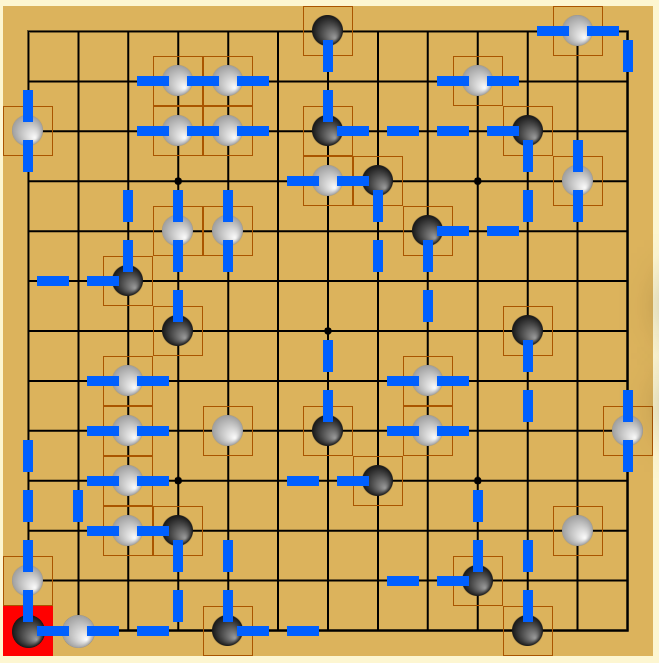

This should clue you in that something else is going on. You might also figure it out from the Go board itself. Or, if you just start clicking around randomly, you will notice that certain stones or edges are sometimes highlighted in red. This happens when you have violated one of the rules (Masyu, Go, or Gomoku).

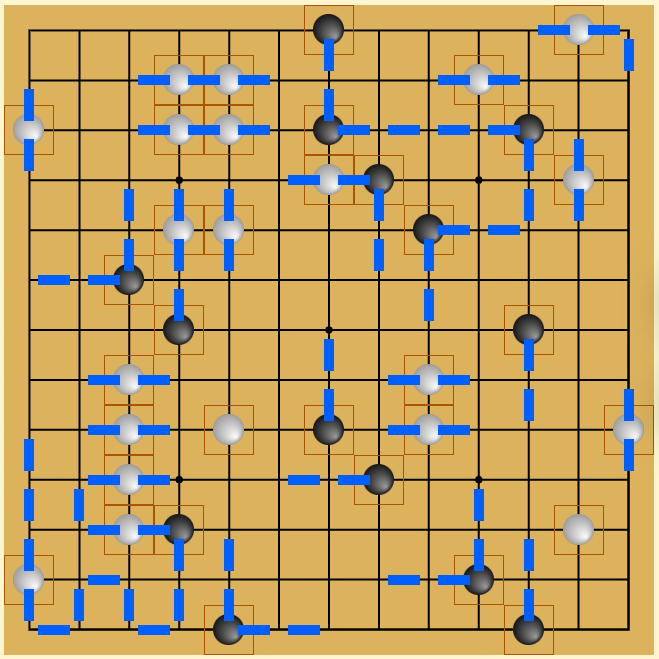

Using the Go rules, you can deduce that the bottom left corner must be Option 2. Option 1 traps a black stone in the bottom left with no liberties, so it would be captured, which violates the Go rules.
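The capture rule boils down to counting liberties: flood-fill each group of same-colored stones and count the distinct empty cells touching it. The sketch below treats the board as a finished position and flags any group with zero liberties. In real Go, captures are resolved move by move, and the opponent's stones are removed first. Because this puzzle forbids any capture at all, checking the whole position seems a reasonable stand-in. The string-row board encoding ('.', 'B', 'W') and the function names are hypothetical.

```typescript
// '.' = empty, 'B' = black stone, 'W' = white stone; one string per row.
type Board = string[];

// Counts the liberties of the group containing (row, col) by flood fill.
function libertiesOfGroup(board: Board, row: number, col: number): number {
  const color = board[row][col];
  const rows = board.length;
  const cols = board[0].length;
  const seen = new Set<string>([`${row},${col}`]);
  const liberties = new Set<string>();
  const stack: [number, number][] = [[row, col]];
  while (stack.length > 0) {
    const [r, c] = stack.pop()!;
    for (const [dr, dc] of [[1, 0], [-1, 0], [0, 1], [0, -1]]) {
      const nr = r + dr;
      const nc = c + dc;
      if (nr < 0 || nr >= rows || nc < 0 || nc >= cols) continue; // off the board
      const key = `${nr},${nc}`;
      const cell = board[nr][nc];
      if (cell === ".") {
        liberties.add(key); // empty neighbor: a liberty of the group
      } else if (cell === color && !seen.has(key)) {
        seen.add(key); // same color: part of the same group
        stack.push([nr, nc]);
      }
    }
  }
  return liberties.size;
}

// True if any stone belongs to a group with no liberties (i.e. a capture).
function hasCapturedGroup(board: Board): boolean {
  for (let r = 0; r < board.length; r++) {
    for (let c = 0; c < board[r].length; c++) {
      if (board[r][c] !== "." && libertiesOfGroup(board, r, c) === 0) {
        return true;
      }
    }
  }
  return false;
}
```

For instance, `hasCapturedGroup(["BW.", "W..", "..."])` flags a black corner stone whose only two neighbors hold white stones.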

Similarly, the path cannot go left from the black stone on the left side of the middle row. Otherwise, you would have to add another white stone, creating 5 whites in a row.
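The Gomoku condition is a straightforward line scan. From every stone, walk in four directions and count consecutive stones of the same color. The write-up does not say whether diagonals count in this puzzle. This sketch assumes they do, as in standard Gomoku. The board encoding and the name `hasFiveInARow` are hypothetical.

```typescript
// '.' = empty, 'B' = black stone, 'W' = white stone; one string per row.
type Board = string[];

// Only four directions are needed; the opposite ones are covered by
// starting the scan from the other end of the line.
const DIRECTIONS: [number, number][] = [
  [0, 1], // horizontal
  [1, 0], // vertical
  [1, 1], // diagonal
  [1, -1], // anti-diagonal
];

// True if some color has 5 or more stones in an unbroken straight line.
function hasFiveInARow(board: Board): boolean {
  const rows = board.length;
  for (let r = 0; r < rows; r++) {
    for (let c = 0; c < board[r].length; c++) {
      const color = board[r][c];
      if (color === ".") continue;
      for (const [dr, dc] of DIRECTIONS) {
        let length = 1;
        let nr = r + dr;
        let nc = c + dc;
        while (nr >= 0 && nr < rows && nc >= 0 && nc < board[nr].length && board[nr][nc] === color) {
          length++;
          nr += dr;
          nc += dc;
        }
        if (length >= 5) return true;
      }
    }
  }
  return false;
}
```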

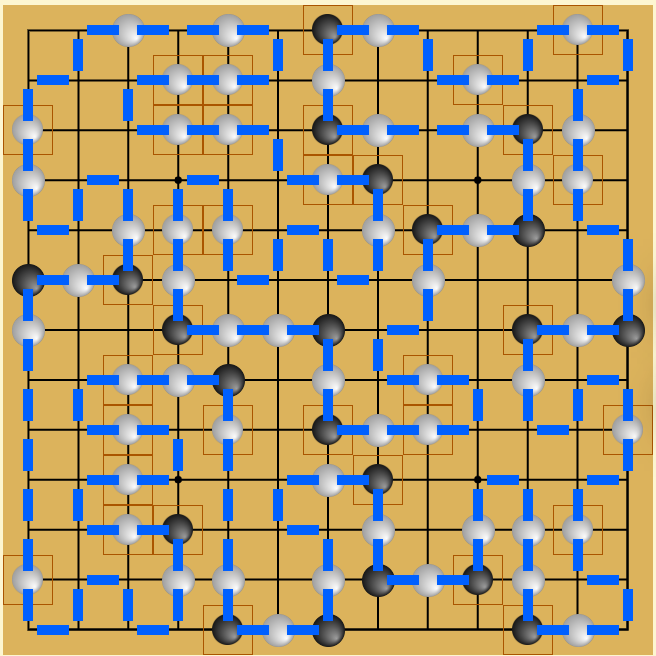

Using pieces of logic like this, you can solve the rest of the board.

Note that, given the initial state, this puzzle is uniquely solvable using just the following rules:

- Standard Masyu rules

- You must place a white or black circle/stone wherever one can be placed according to Masyu rules

- You cannot capture any pieces as per Go rules

- You cannot form a 5-in-a-row (Gomoku)

Notably, knowing the number of additional stones is not required to solve the board uniquely. The stones are provided anyway, to make solving a bit easier and to encourage solvers to actually place stones wherever they can be placed.

Puzzle Construction

Go and Nikoli-style puzzles seem like such a perfect fit. They both have grids and they both have black and white circles/stones placed in some but not all positions on the board. I also played Gomoku growing up and thought that would add another interesting aha. I’ve sat on this idea for a while since I could never come up with a reasonable way to extract an alphabetic answer. When we decided to do a round with no words or answer box, this seemed like a great opportunity to execute on this idea.

Although I have done quite a few Nikoli-style grid logic puzzles, both in hunts and in logic puzzle competitions, I had never constructed a full puzzle. Using some advice I got from Galactic's Anderson Wang a few years ago, I worked forward from an empty board to construct the logic. I learned about the interactions between the various conditions as I went, and tried to inject situations that relied on the Go and Gomoku rules to make progress. The Go rules helped restrict the edges of the board: no black pieces in the corners, and no two black pieces adjacent along an edge. (The path cells immediately next to a black circle always run straight beside a turn, so they must hold white stones. A black stone in a corner, or an adjacent black pair on an edge, is therefore left with no liberties.) Towards the center of the board, it was more difficult to build a situation that required the Go rules, especially one larger than a single surrounded stone. In the center, I therefore ended up relying more on interactions with the Gomoku rules.

Silent Interactions

Given that the puzzle couldn't have any words or flavor text, I needed to figure out how to convey the rules to the solver. I thought that highlighting a stone or edge in red would be a sufficiently strong indicator that something had gone wrong. In my first version, I set up the code to highlight all rule violations, both for stones placed on the board and for stones implied by the path state. However, in the first playtest, the solvers were able to guess and check their way through the puzzle, relying on the highlighting to tell them when to stop and reverse course. They never fully understood the rules, yet they still completed the puzzle in about an hour. To prevent this, I removed the highlighting for implied stones. I also added a stone bank to the puzzle to indicate to teams that they needed to place additional stones on the board.

Technical Implementation

Until a couple of weeks before the hunt, this puzzle was written in a mess of JavaScript without any attempt at obfuscation. When it came time to port it onto the website, the dev team discussed our options. ⚫ and 🔲 had similar requirements. We wanted users to get immediate feedback rather than having to click a button every time they wanted to check the validity of a board state. For ⚫, we definitely didn't want teams to share a single board state, because logic puzzles aren't conducive to multiple people working on the same copy simultaneously. One option we considered was implementing these two puzzles using serverless functions. However, we weren't sure how much this would end up costing, given the pricing structure. In retrospect, we probably should have done more due diligence on this route, at least to get an estimate of the possible cost, before abandoning it.

We ended up leaving most of the code on the client side and just obfuscating all of the interesting bits. Unlike 🔲, I didn't need to check for correctness by comparing each element against a solution. Instead, I was able to verify correctness using the Masyu, Go, and Gomoku conditions, so I only needed to obfuscate these correctness functions.

Adding Some Excitement to the First Few Hours of the Hunt

Early on in a hunt, there isn't all that much for the hunt staff to do. Expert teams don't have hint requests yet, and given our weekday start time, many teams probably haven't even started. We're just sitting around on Discord, watching puzzles get their first solves and debating whether teams are going faster or slower than expected. Having had this experience at the last two Huntinalities, I made sure to give the team something to focus on during these first few hours. <sarcasm in case that wasn't clear>

As teams started on The Trial of Silence round, we started seeing some incorrect answers come through for this puzzle. If my code had been working correctly, this should have been impossible: we should only have been submitting an answer if all of the condition checks passed. Furthermore, since we were hashing the board state string, all we could see in our database was a 16-character alphabetic string, with no reasonable way to reverse engineer the board state. However, the board state wasn't being marked as correct, and there was no indication on the puzzle page that an answer had even been submitted, so this problem was mostly invisible to teams. The biggest risk was that a team would somehow manage to submit 20 unique incorrect guesses and unknowingly get locked out of the puzzle. We were tracking wrong guesses per team, and no one ever came close to exhausting the limit. We couldn't monitor it closely for the entire hunt, though, so we started looking into fixes.

Around this time, we also started getting a couple of reports of teams being shown the correct answer. For reasons discussed below, we had set up the puzzle to display the completed grid on page load if the team had already solved it. Our first hypothesis was that someone on these teams had correctly solved the puzzle unbeknownst to their teammates. However, we got at least one screenshot showing a page state that I thought was impossible: the board solved, but no 🎉 above the page indicating completion.

Within an hour, Dan was able to recreate some of the wrong answers, which were caused by gaps in my solution verification logic. That still didn't explain the reports of teams being shown the solution. Given that ⚫ and 🔲 were implemented similarly, we were concerned that we'd start hearing similar reports about 🔲. Still without a clear understanding of how the solution was leaking to teams, we decided to do two things:

- Before sending an answer submission to our backend, verify that the first 4 characters of the 16-character hash were correct. Although this exposed the first 4 characters of the solution's hash, it was still impossible for teams to use this to gain any advantage or insight into the puzzle.

- Remove the feature that loaded the completed board state for teams that had completed the puzzle. This was the only place in the code that contained the correct board state, so we figured that once we removed it, it would be impossible for that state to be rendered to teams. (Even our database didn't have a representation of the correct board state.)
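The write-up doesn't say which hash produced the 16-character alphabetic string. The sketch below therefore uses a stand-in to show the shape of the first fix: hash the board-state string into 16 letters, and only contact the backend if the first 4 letters match a prefix shipped with the page. The FNV-1a-based construction, the lowercase alphabet, and the names `hashBoardState` and `shouldSubmit` are all assumptions. FNV-1a is not cryptographic, and a real deployment would more plausibly use something like SHA-256 via Web Crypto.

```typescript
// FNV-1a (32-bit): a simple, NON-cryptographic string hash, used here
// only as a stand-in for whatever hash the real puzzle used.
function fnv1a(text: string): number {
  let hash = 0x811c9dc5;
  for (let i = 0; i < text.length; i++) {
    hash ^= text.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193);
  }
  return hash >>> 0; // reinterpret as unsigned 32-bit
}

// Hypothetical: derive a 16-letter lowercase string from a board-state string
// by hashing it with a different index prefix for each output letter.
function hashBoardState(boardState: string): string {
  let letters = "";
  for (let i = 0; i < 16; i++) {
    letters += String.fromCharCode(97 + (fnv1a(`${i}:${boardState}`) % 26));
  }
  return letters;
}

// Hypothetical client-side gate: only send a submission to the backend if
// the first 4 letters of the hash match the prefix shipped with the page.
function shouldSubmit(boardState: string, expectedPrefix: string): boolean {
  return hashBoardState(boardState).slice(0, 4) === expectedPrefix;
}
```

With this design, the client only ever holds 4 letters of the correct hash. Reading the code reveals nothing about the board, but a board state that slips past buggy verification logic is very unlikely to match the prefix and reach the backend as a wrong guess.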

These two changes were sufficient to fix both problems. However, we still didn't understand why the answer was being leaked, so there was still some uncertainty as to whether the problem was truly resolved. Over the next couple of hours, the dev team managed to recreate the problem locally and demonstrate that the leak was happening across teams. Once we discovered this, Peter started reviewing my code and soon had a good sense of what was happening. Peter's Puzzle Postmortem Passage below goes into more detail. He ended up implementing a proper fix. However, since the hunt appeared to be going smoothly and we didn't want to risk it, we never deployed that fix or any fixes to the solution verification logic.

Max’s Muddled Mess

I’ll be the first to admit that I did not test this code well enough. Given my knowledge of our tech stack, I think it would have been very unlikely that I would have caught the issue with the shared execution environment giving away the answer for free, but I should have caught the bugs in my answer checking logic.

Given what we can discern from our logs and the root cause of the issue, we suspect that no more than 25 teams were accidentally shown the solution before we pushed our fix. Because of the conditions needed to trigger this event, the real number is likely much smaller and many of those teams had already made significant progress toward the correct solution. Thank you to the teams that reached out informing us of the unusual behavior and providing us with screenshots. My apologies for the confusion and for breaking the silence by having to issue an errata on this puzzle. Props to our dev team for working through this issue together and quickly deploying a fix, especially to Peter for figuring out the root cause of the problem and helping put our minds at ease that we had actually patched the issue.

Peter’s Puzzle Postmortem Passage

In the first hours of the hunt, we started getting messages that this puzzle was occasionally opening to a state showing the final solution, even though the solver's team had not solved the puzzle.

This was the result of two decisions:

- We wanted teams who have solved the puzzle to still have access to their solved state for future reference

- We wanted to keep the state of the puzzle serializable as a string so solvers could save their states and send them to us in hints and stuff.

The natural way to implement this was to fill in the solved string whenever the puzzle information indicated that the puzzle was solved. No problem, right?

What we missed was that the puzzle state was being kept in a global variable. The variable was initialized with the puzzle's default state, then modified during the initial render to contain the solved state. The trouble is that, unbeknownst to us, Vercel (our frontend host) reuses execution environments. During the initial render for a team that had solved the puzzle, we'd overwrite the variable. If another request arrived shortly afterward, Vercel would run the initial render again in the same environment, and we'd send the new requester the contents of that variable as their default state.

The crucial missing piece here was a difference between server-rendered React and traditional static JavaScript. If we had declared a variable in the global scope of static JavaScript, we'd have had no problems, because that variable would only ever exist in memory on the solver's computer. However, once we start rendering on a server, those variables get initialized in memory in a process that is effectively shared across users.
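The failure mode can be reproduced without React or Vercel. The sketch below is plain TypeScript with hypothetical names (`renderBuggy`, `renderFixed`, `DEFAULT_STATE`, `SOLVED_STATE`) and made-up state strings. It models a single warm server process handling two requests in a row: first from a team that has solved the puzzle, then from one that hasn't. It illustrates the general pattern only; it is not the hunt's actual code or Peter's fix.

```typescript
// Made-up serialized board states for illustration.
const DEFAULT_STATE = "..........";
const SOLVED_STATE = "BW.WB..W.B";

// Buggy: module-level state lives as long as the server process, so a
// reused (warm) execution environment keeps whatever the last request wrote.
let puzzleState = DEFAULT_STATE;

function renderBuggy(teamHasSolved: boolean): string {
  if (teamHasSolved) {
    puzzleState = SOLVED_STATE; // mutates state seen by every later request
  }
  return puzzleState;
}

// One way to avoid it: derive the initial state per request, without
// touching anything that outlives the request.
function renderFixed(teamHasSolved: boolean): string {
  const initialState = teamHasSolved ? SOLVED_STATE : DEFAULT_STATE;
  return initialState;
}

// Two requests handled by the same process: a solved team, then an unsolved one.
const first = renderBuggy(true);
const second = renderBuggy(false);
console.log(first === SOLVED_STATE, second === SOLVED_STATE); // true true: the leak
console.log(renderFixed(false) === DEFAULT_STATE); // true
```

In a browser, each solver's page gets its own copy of `puzzleState`, so the buggy version is harmless there. The leak only appears once the same module instance serves more than one user.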

Overall, this was a pretty cool problem to diagnose; you rarely get satisfying ahas when you're on the team running the hunt.